Evidence AI Module

Case Study: Designing the Synnch Evidence AI Module

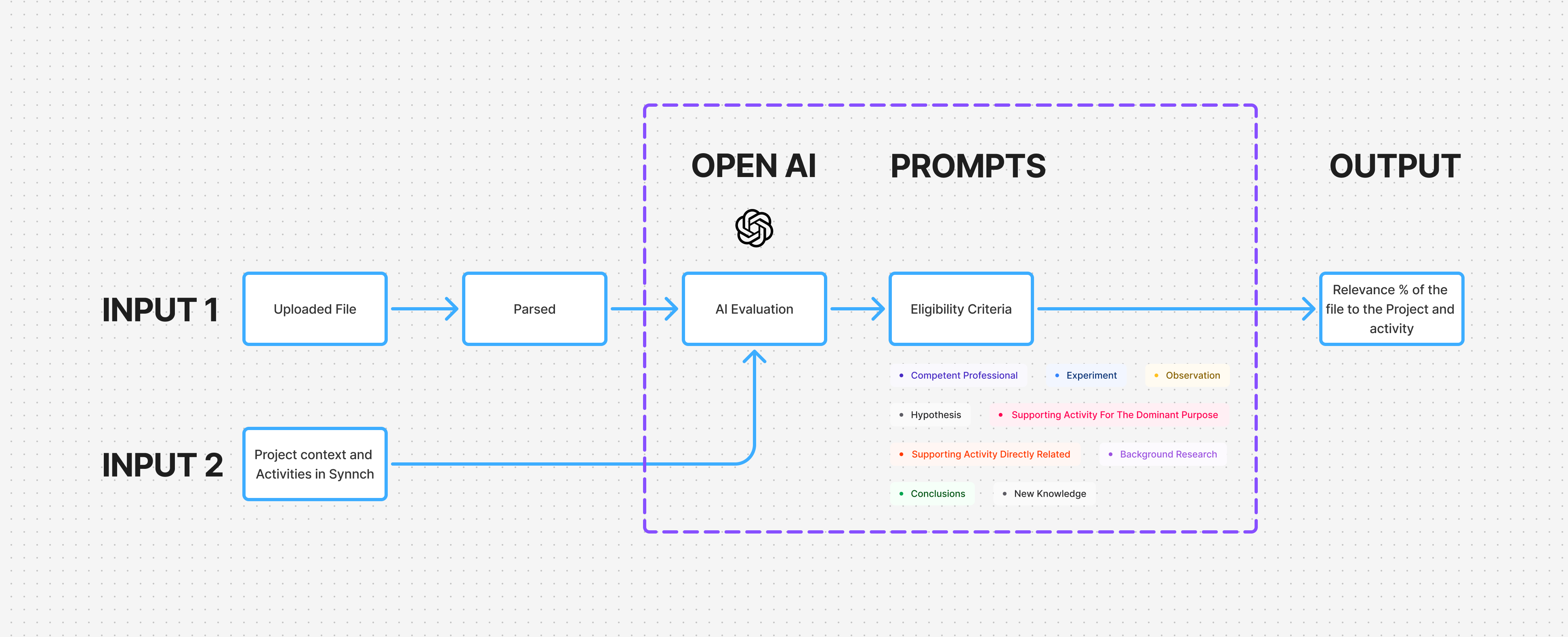

Uploaded text files are parsed using AI via our custom OpenAI integration, then intelligently matched to Projects and Activities within the Synnch R&D platform.

I. Introduction / The Challenge

Project Goal

Create a smart, user-friendly Evidence Module that works as a central hub for R&D files—enhanced with AI to analyze and score relevance against R&D tax criteria. The aim: to save time, increase clarity, and build trust.

Product Context

Synnch’s Evidence AI module lets consultants and claimants upload files—like reports, invoices, and notes—as evidence for R&D claims. Using criteria such as “New Knowledge,” “Experiment,” and “Hypothesis,” it automatically matches each file to the right project and activity in Synnch. This automated matching not only speeds up evidence review but also strengthens compliance by ensuring every piece of evidence is accurately linked to the appropriate R&D claim.

The Problem

Manually reviewing thousands of files is slow, expensive, and subjective. Consultants were overwhelmed, while claimants lacked visibility into how strong their evidence really was. Managing and organizing all this data through disconnected tools only added to the pain.

My Role

As the UI/UX Designer, I led the end-to-end design—covering upload flows, table layouts, AI score visualization, side panels, bulk actions, and managing AI inconsistencies. I collaborated closely with the CEO, product owner, and developers.

II. Discovery & Research / Understanding the User

Approach

- Deep-dive sessions with the CEO and PO

- Analysis of R&D compliance documents

- Competitive research on evidence and AI tools

- UI/UX exploration of scalable tables and AI feedback mechanisms

The Evidence module parses uploaded files and uses a custom OpenAI LLM to evaluate their relevance to project activities against 9 eligibility criteria—outputting a relevance percentage to help consultants and claimants assess claims faster, eliminate manual review, and save time.
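This case study does not say how the relevance percentage is derived from the individual criteria, so the following is only a minimal sketch under one assumption: each criterion gets a score between 0 and 1, and the percentage is their unweighted mean. The function name and the score values are hypothetical; only the three criterion names come from the product description above.

```python
from statistics import mean


def relevance_percentage(criterion_scores: dict[str, float]) -> int:
    """Collapse per-criterion scores into a single relevance percentage.

    Assumption (not from the case study): an unweighted mean of scores
    in [0, 1], rounded to a whole percent.
    """
    if not criterion_scores:
        raise ValueError("at least one criterion score is required")
    for name, score in criterion_scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name!r} must be between 0 and 1")
    return round(mean(list(criterion_scores.values())) * 100)


# Illustrative scores only; these are not real model outputs.
example_scores = {"New Knowledge": 0.8, "Experiment": 0.6, "Hypothesis": 0.7}
print(relevance_percentage(example_scores))
```

A weighted mean would be an equally plausible choice if some criteria matter more than others; the point of the sketch is only that one headline number can sit on top of a per-criterion breakdown.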

💡 Key Insights

- Consultants needed speed: Fast uploads, bulk actions, and reliable AI assessments.

- Claimants needed transparency: Simple, clear explanations of AI scores and how they were calculated.

- AI needed simplification: Showing complex criteria breakdowns had to be digestible.

- Data challenges were real: Duplicate files, mixed sources, and unclear processing states needed clear UI handling.

- Consistency mattered: Aligning with Synnch’s design system would make the module feel like a natural extension of the platform.

III. Ideation & Design Exploration

Design Process

I explored table structures with progressive loading for AI scores, various score breakdown visualizations, and user-friendly upload paths. Multiple ideas were prototyped for managing duplicates, rematching AI suggestions, and simplifying bulk actions.

Key Iterations

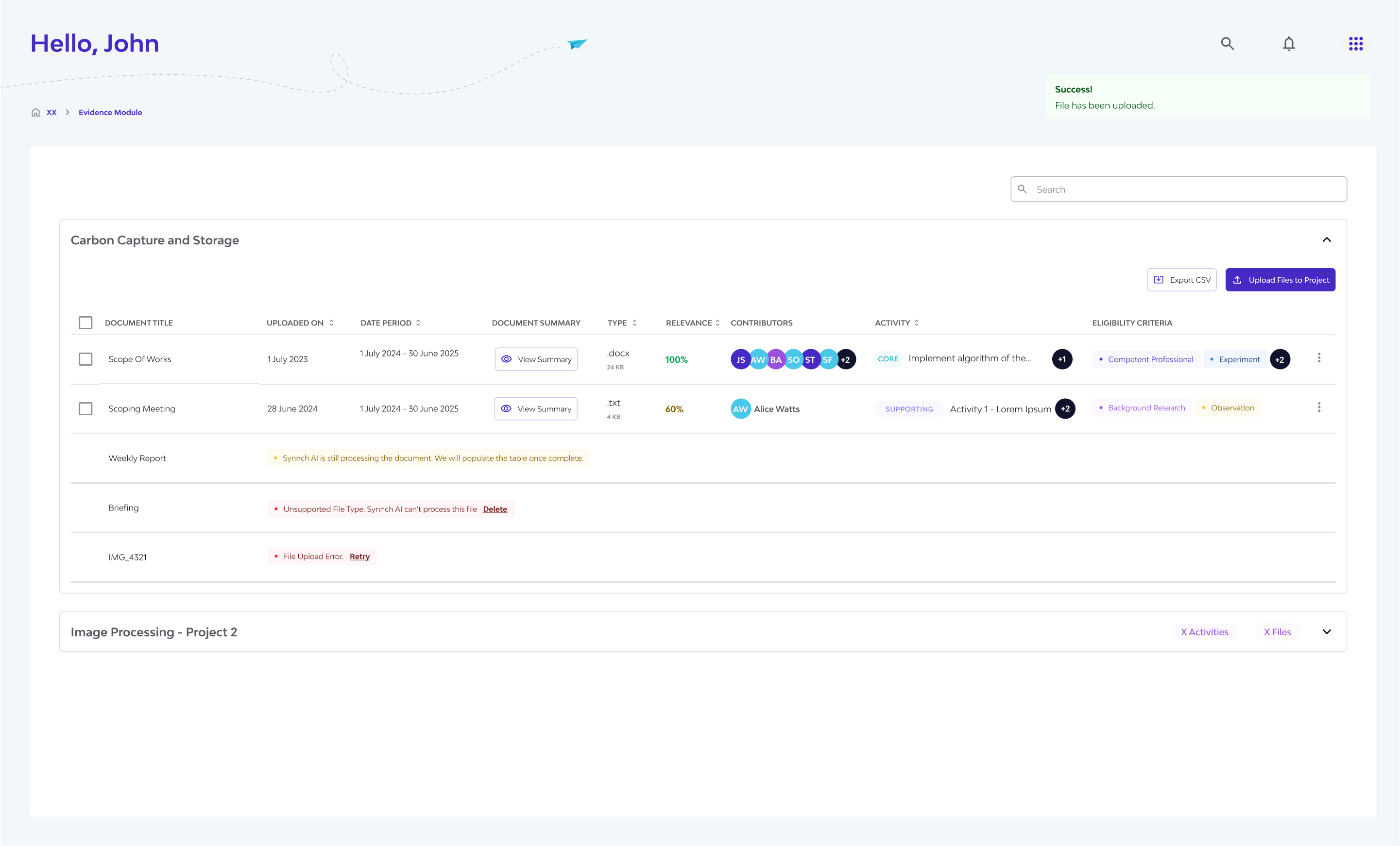

This was the initial design - more tabs, more steps. We simplified the Evidence AI flow by removing the file repository step—files are now uploaded and evaluated automatically within the project context.

Initial thoughts: I began by breaking down the process step by step and creating initial mockups. The first concept featured a multi-step wizard with several tabs and selection points. In this version, users would first upload files into a central "repository," and then manually select which documents to evaluate.

However, during early user feedback sessions, it became clear that this approach felt too complex and misaligned with the purpose of Synnch Evidence AI. It gave the impression of being a document management system rather than an AI-powered evaluation tool for R&D tax incentives.

To address this, we simplified the user flow. Now, files are uploaded directly within the context of a project or activity, and the system automatically evaluates them using our AI engine. This eliminated unnecessary steps, reduced confusion, and made the experience more intentional—transforming Evidence AI into a focused tool for assessing relevance against R&D criteria, rather than a general-purpose file storage system.

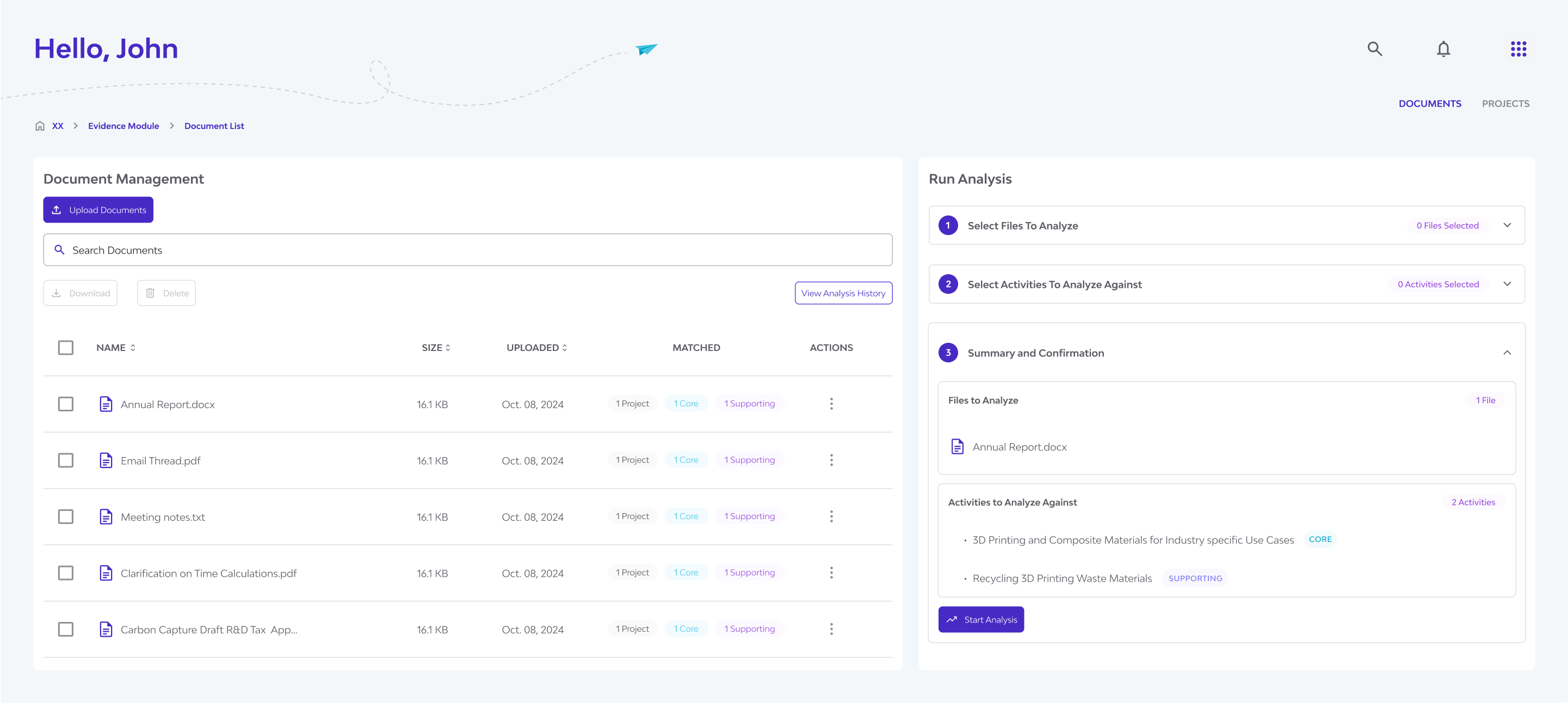

- Upload Flow: Simplified from a multi-step wizard to drag-and-drop uploads straight into project containers—instantly triggering AI analysis.

- Project Layout: Switched to collapsible accordions per project to prevent data overload and make it easier to scan.

- AI Output Display: Designed a clean summary in the table view, with detailed reasoning in the side panel—reducing cognitive load while preserving detail.

IV. The Solution / Design Execution

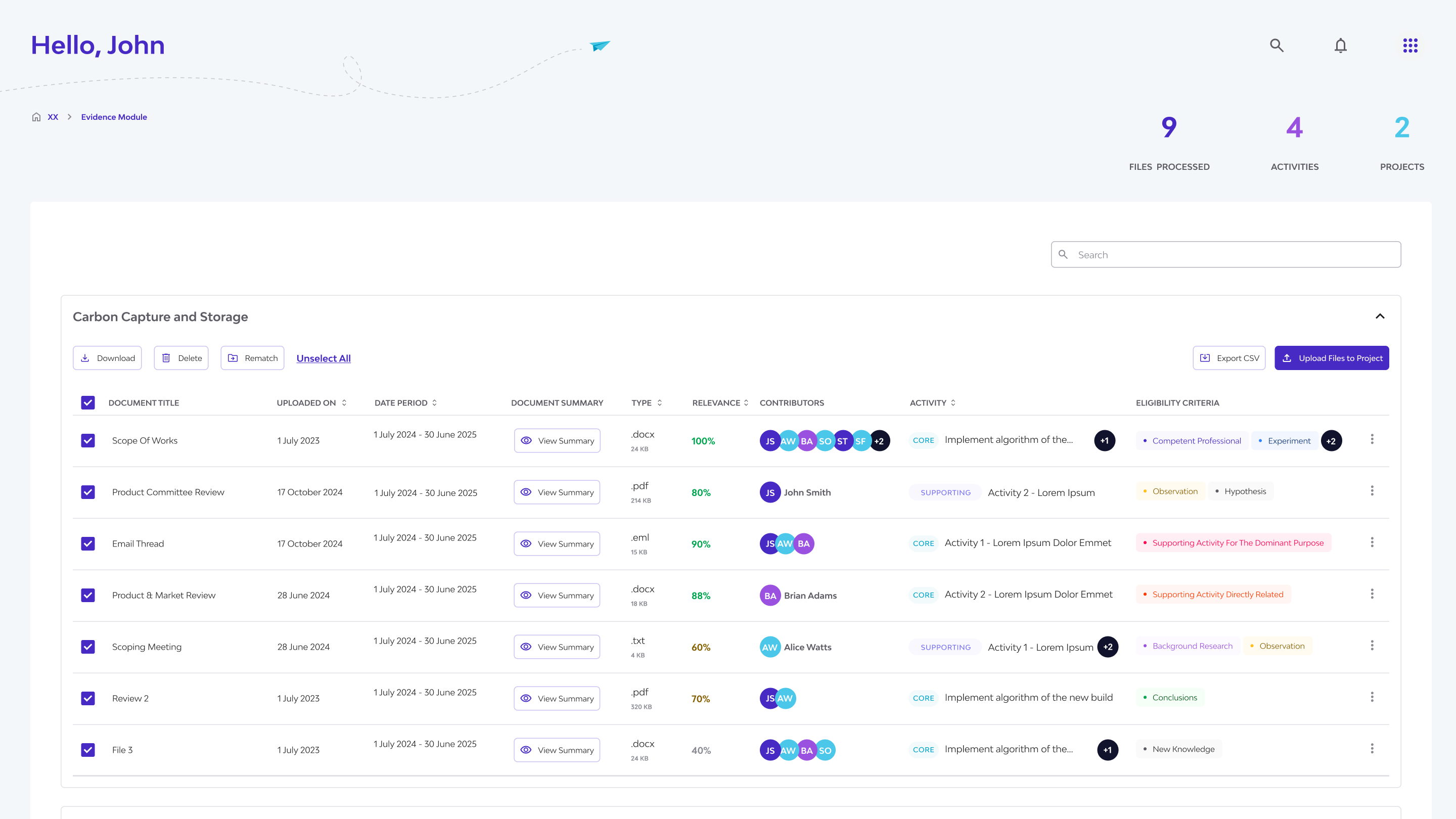

Central Evidence Table

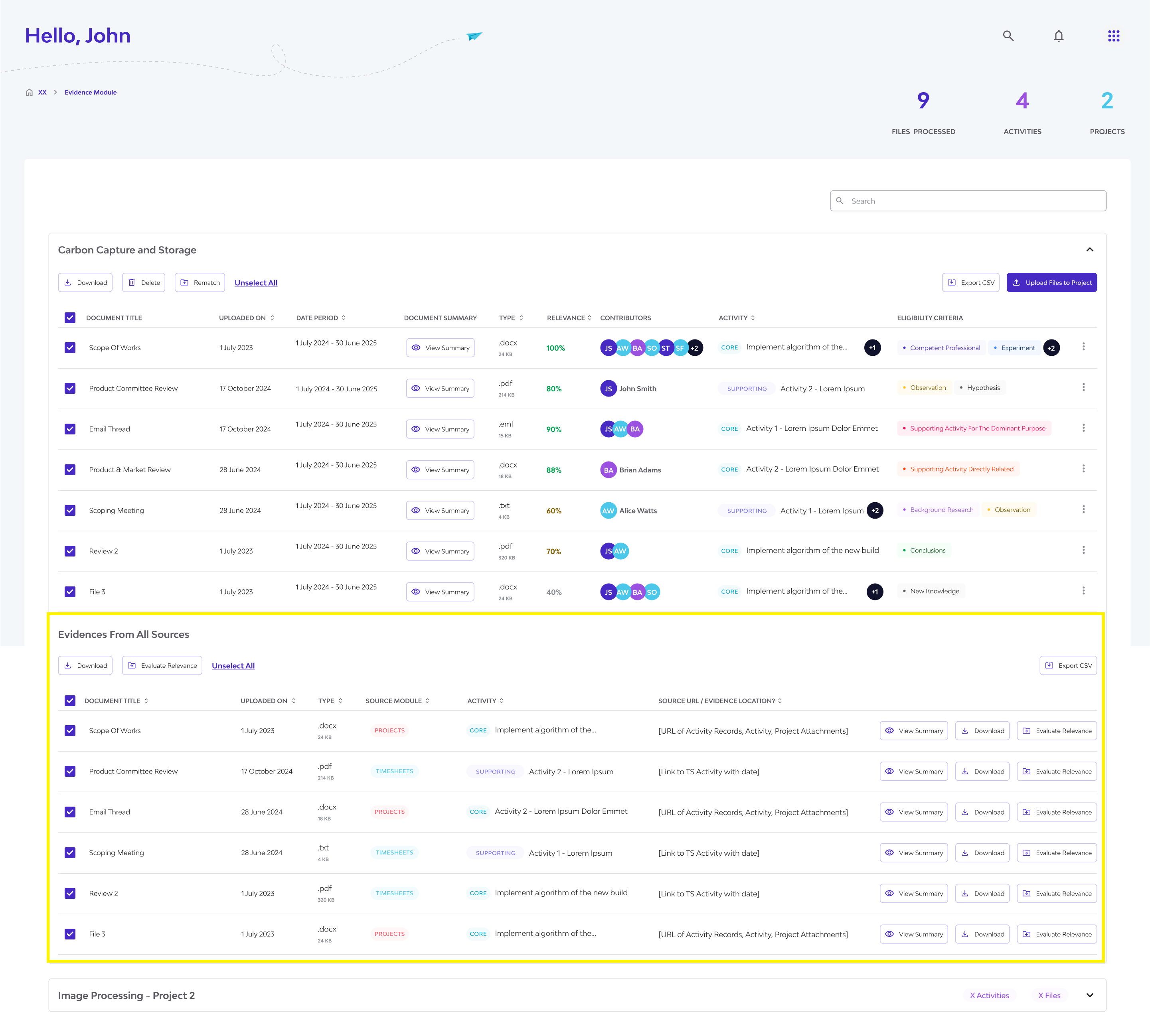

The implemented Evidence AI table presents only the most relevant details across 9 columns, with support for multi-file selection, bulk actions, and a side panel that reveals full document insights on row click—balancing depth with clarity.

- Files grouped by project in collapsible accordions

- Key data like File Name, AI Relevance Score, Matching Activities, and Eligibility Criteria summarized

- Visual indicators for upload and processing states

Clear AI Breakdown

- AI relevance score shown upfront

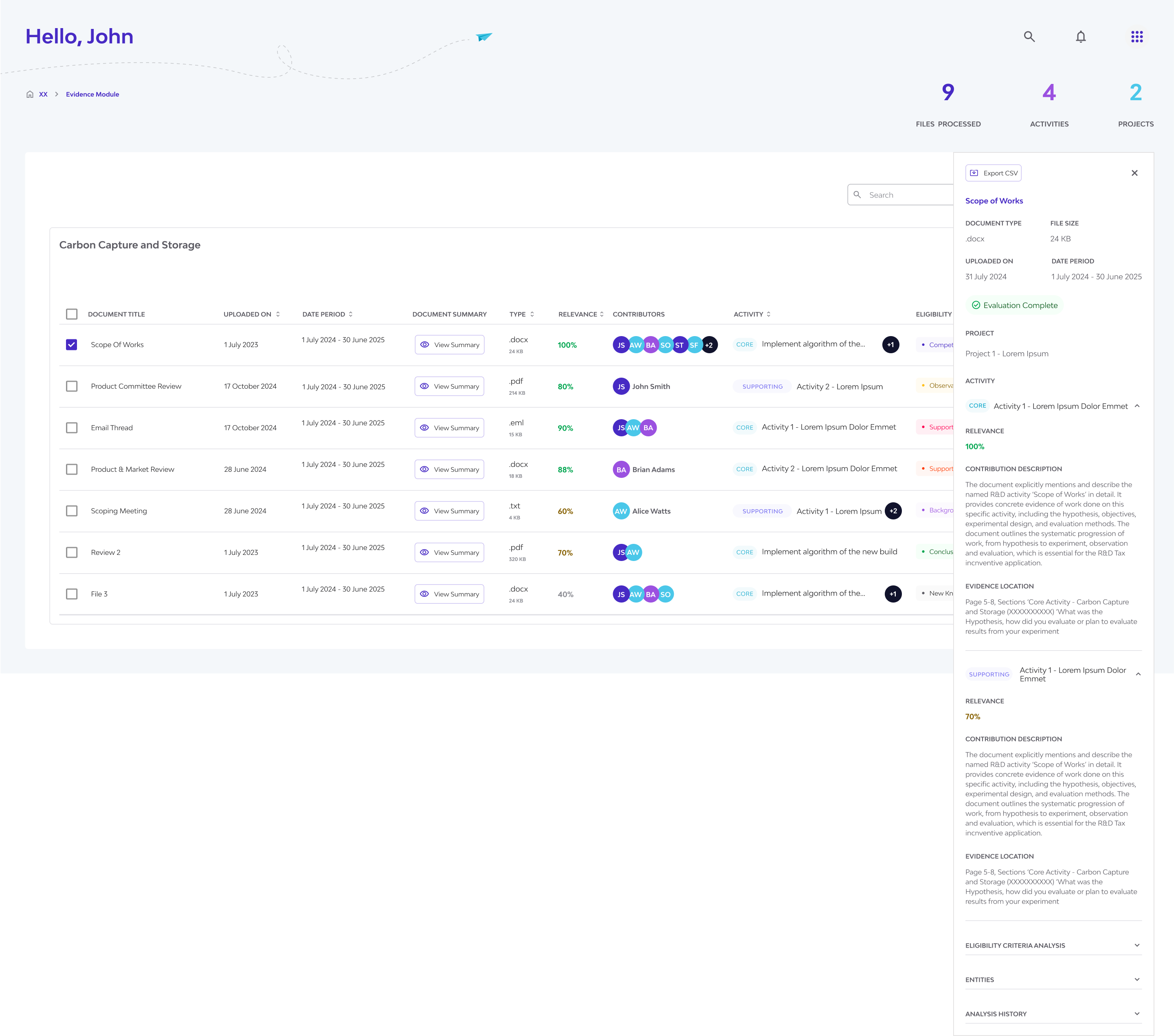

- Side panel displays full score breakdown by R&D criteria (e.g., Hypothesis, Experiment), with AI-generated reasoning clearly labeled

Side Panel for Context

The side panel provides a detailed view of each document, including parsed content, relevance breakdown, linked projects and activities, and evaluation against the 8 eligibility criteria—enabling quick, context-rich review without leaving the table.

- Matches other Synnch modules

- Includes file details, reasoning history, and editable fields

Streamlined Uploads

- ✅ Drag-and-drop files directly into project folders

- ✅ Auto-triggers AI analysis—no extra steps needed

Track each file’s processing stage—uploading, unsupported format, or pending analysis—with quick action buttons for next steps.
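One way to picture the processing stages is as a small state model that the table reads when deciding which buttons to show. The sketch below uses the three stages named above; the action labels and the `quick_actions` helper are hypothetical and only illustrate the idea of mapping each state to its next steps.

```python
from enum import Enum


class FileState(Enum):
    """Processing stages named in the case study."""
    UPLOADING = "uploading"
    UNSUPPORTED_FORMAT = "unsupported format"
    PENDING_ANALYSIS = "pending analysis"


# Hypothetical quick actions per state; the labels are illustrative only.
QUICK_ACTIONS: dict[FileState, list[str]] = {
    FileState.UPLOADING: ["Cancel upload"],
    FileState.UNSUPPORTED_FORMAT: ["Remove file", "Upload a different file"],
    FileState.PENDING_ANALYSIS: ["Run analysis"],
}


def quick_actions(state: FileState) -> list[str]:
    """Return the action buttons to show next to a file in this state."""
    return list(QUICK_ACTIONS[state])


for state in FileState:
    print(f"{state.value}: {', '.join(quick_actions(state))}")
```

Keeping the mapping in one place means every state has an explicit next step, which is the property the status indicators in the table rely on.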

Managing AI Challenges

- Duplicate uploads? Prompted.

- Inconsistencies? Logged with the option to revert.

- Bulk file actions available: Delete, Download, Rematch

- While testing the Evidence AI module, we found relevance scoring to be inconsistent—even with identical source files. Using controlled examples (90%, 50%, and 30% relevance), the AI still produced varied results. This reflects the evolving nature of AI, and such inconsistencies are expected as the technology continues to improve.
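Two of the points above lend themselves to a short sketch: spotting duplicate uploads and quantifying how much scores drift when the same file is analyzed repeatedly. The case study does not describe how either is implemented, so this assumes duplicates are detected by hashing file contents and that drift is summarized as a range and standard deviation. All function names, file names, and score values are made up for illustration.

```python
import hashlib
from statistics import pstdev


def content_fingerprint(data: bytes) -> str:
    """SHA-256 of the file contents; identical bytes give identical digests."""
    return hashlib.sha256(data).hexdigest()


def find_duplicates(files: dict[str, bytes]) -> list[list[str]]:
    """Group file names whose contents are byte-for-byte identical."""
    groups: dict[str, list[str]] = {}
    for name, data in files.items():
        groups.setdefault(content_fingerprint(data), []).append(name)
    return [names for names in groups.values() if len(names) > 1]


def score_spread(scores: list[float]) -> tuple[float, float]:
    """Return (range, population standard deviation) for repeated runs."""
    if not scores:
        raise ValueError("at least one score is required")
    return max(scores) - min(scores), pstdev(scores)


# Illustrative inputs only; not data from the actual testing.
uploads = {
    "report.txt": b"trial results",
    "report-copy.txt": b"trial results",
    "invoice.txt": b"invoice 42",
}
print(find_duplicates(uploads))

repeated_runs = [88.0, 92.0, 90.0]  # hypothetical scores for one file
print(score_spread(repeated_runs))
```

A spread like this could feed the inconsistency log: when repeated runs on the same file differ by more than some chosen tolerance, the UI can flag the score and offer the revert option.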

Efficiency Features

- CSV export for reports

- Progressive loading for smoother handling of large datasets
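As a rough illustration of the CSV export, the sketch below writes table rows using the summary columns listed for the Evidence table. The actual export format is not described in this case study, so the column selection, the `export_csv` helper, and the sample row are assumptions.

```python
import csv
import io

# Column names taken from the table summary; the export layout is assumed.
COLUMNS = [
    "File Name",
    "AI Relevance Score",
    "Matching Activities",
    "Eligibility Criteria",
]


def export_csv(rows: list[dict[str, str]]) -> str:
    """Serialize evidence rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical row for illustration.
sample_rows = [
    {
        "File Name": "lab-notes.txt",
        "AI Relevance Score": "70%",
        "Matching Activities": "Activity A; Activity B",
        "Eligibility Criteria": "Hypothesis, Experiment",
    }
]
print(export_csv(sample_rows))
```

Using `csv.DictWriter` rather than joining strings by hand means values that contain commas, such as a list of criteria, are quoted automatically.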

Adherence to the Synnch UI kit ensured brand and interaction consistency.

V. Validation & Iteration / Testing

Testing Approach

- Internal reviews with product and dev teams

- Usability tests simulating consultants and claimants

- Key flows tested: upload, AI review, filtering, bulk actions, and navigating projects

Findings

- Accordion layout fixed the scroll fatigue problem

- AI explanations needed slight refinements for clarity

- Bulk actions and upload process were intuitive and well-received

Refinements

- Adjusted modal content for better AI clarity

- Improved filtering controls based on feedback

- We're exploring the consolidation of all uploaded evidence from various modules into a single, centralized Evidence Module. This involves a planned file migration—an initiative significant enough to be treated as a standalone project. Stakeholders requested a unified table view for all files. I designed mockups to support this, allowing users to manually select which unprocessed files should be fed into the AI for analysis.

Unified Evidence Hub: A centralized table consolidating all uploaded files across modules, with manual selection for AI analysis and reference scoring.

VI. Impact & Results

What We Delivered

An intuitive Evidence AI Module that saves time, adds clarity, and feels fully integrated with Synnch’s platform.

Expected Impact

- Cut consultant review time by over 50%

- Enable claimants to better self-assess evidence

- Boost consistency and objectivity in R&D claims

- Strengthen Synnch’s position as a full-service R&D platform

VII. Learnings & Reflection

What I Learned

- Transparency in AI is non-negotiable—users need to see what the AI did and why

- Distinguishing between AI content and user content builds trust

- UI should support AI’s strengths while accounting for its unpredictability

Challenges = Growth

- Designing for AI inconsistencies and duplicate handling required creative UI thinking

- Working closely with AI engineers helped bridge tech and design effectively

What’s Next

We’re exploring letting users run AI analysis on old files, and possibly combining the repository and AI views into one seamless interface.